The Faster Horse Problem in AI

Embracing the Emergent Behaviors of Large-Scale Models

The Faster Horse Problem

In recent weeks, I’ve talked about “The Faster Horse Problem” in AI: a consequence of industry’s prioritization of incremental innovation for short-term financial gain over the revolutionary applications that will deliver enduring societal transformation. A year into the AI gold rush, industry has become fascinated by the notion of automating specific human tasks and jobs, employing the rhetoric of “AI copilots” and “human replacement.”

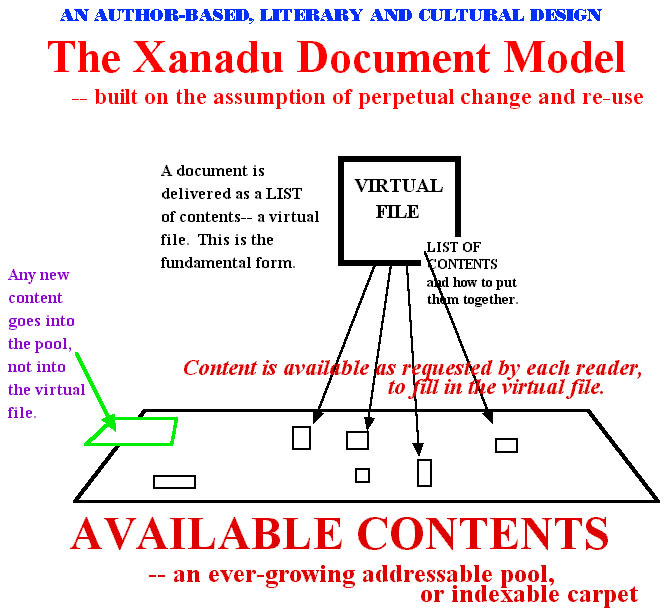

Yet when we frame conversations in terms of how existing, human-centric systems can be "supercharged" or "made more efficient" by AI, we fail to leave room for the creative solutions that re-architect the status quo. We are asking for faster horses instead of cars. These skeuomorphic design principles, which mimic real-world dynamics on digital interfaces through caricature-like simulacra of human behavior, are the same ones that took physical paper and transposed it onto a personal computer. Instead of interrogating what behaviors emerge from novel platforms for computation, industry has a history of designing only with real-world physics in mind, until programs like Xanadu, Alexa, and now ChatGPT break mental models of what’s achievable.

As the tech sector flocks to its next platform shift, such didactic approaches to innovation could be stifling. For instance, why does an LLM have to augment or replace a human in a given workflow? Can another LLM audit the outputs of an LLM that is used by a human? Can swarms of LLMs work collaboratively with intermittent human (or machine) input on a process overseen by another model? Do LLMs even need to know whether inputs or outputs were produced by human or machine counterparts?

As Zachary Lipton, Chief Scientific/Technology Officer at Abridge and Assistant Professor at Carnegie Mellon, recently explained to Senate leaders, the relationship between the number of model parameters and model capabilities continues to be an unsolved mystery for the field. Instead of focusing on characterizing which human jobs are likely to be replaced by AI, we should direct our attention to how the emergent behaviors of large-scale AI models can re-imagine labor and tasks themselves, re-structuring workflows and cost structures at the most fundamental levels, in the same way that the first PC did for computing and streaming did for television.

And what if hallucinations are a feature and not a bug? Skeuomorphism may have gifted us Microsoft Word, but the techno-humanist imagination gave us products like Xanadu, Alexa, and now ChatGPT. The emergent behaviors of technology lie at the crux of these discontinuous innovations.

AI can solve our problems in user experience before we’ve realized they exist. If we allow it.

The cold, hard truth: entrepreneurs commercializing technology over a 2-3 year time horizon (e.g., those who raise venture capital) will need to build with existing workflows and human roles in mind. But the generational companies will not rest on those versions of the world, and will instead leverage these human wedges into the status quo as stepping stones towards a complete re-wiring of society.

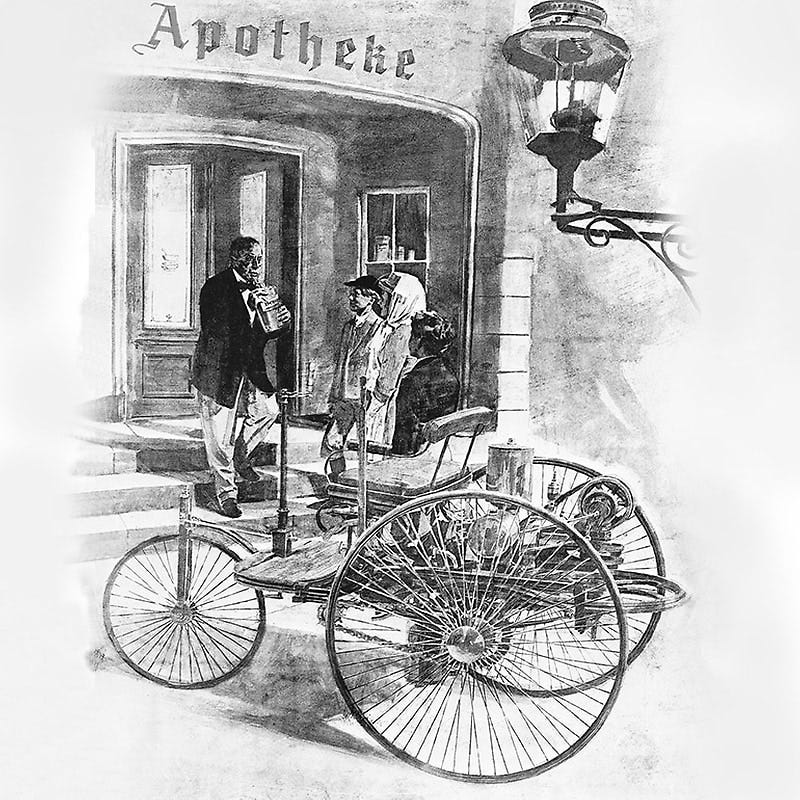

By definition, job-centric innovation is antithetical to the foundational principle of startups: solve big, hair-on-fire problems. Let’s say the year is 1850 and the problem statement is: how do we get from point A to point B as quickly and efficiently as possible? The status quo is by horse and buggy, where the horse is the “worker.” If we focus on optimizing the performance of the worker, we’ll make a faster horse, missing the opportunity to invent the car, or even the plane. Tackling the Faster Horse Problem requires us to be problem-focused rather than worker-focused, and to embrace the emergent behaviors of what new technologies can offer – whether it’s a gas-powered engine or a large-scale AI model.

The Emergent Behaviors of Large Language Models

So what are emergent behaviors of a large-scale AI model, and why do they matter? Emergent behaviors are those that are not explicitly programmed, but arise from model training on large datasets. Much of society’s intrigue (and also worry) about ChatGPT thus far stems from its emergent behaviors and the disbelief that an AI model is capable of them.

In the research community, emergent behaviors are also referenced in the context of zero-shot learning: the ability of a model to understand and carry out a task on the first attempt, without having received any task-specific training or examples. There is a significant body of ongoing research on how large-scale models perform on zero-shot tasks.

Rare disease diagnosis is a straightforward example of zero-shot learning in medicine. If a model is trained to identify common skin conditions but needs to classify a rare one like Xeroderma Pigmentosum, it can leverage its knowledge of related conditions from training data and information about Xeroderma Pigmentosum to make predictions, even though it hasn't seen this specific condition in the training data. The ability of the model to diagnose a never-before-seen disease arises from the complex interactions of the model's learned parameters applied in a novel way, rather than from direct, rule-based programming.
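To make the idea concrete, here is a minimal sketch of one classic formulation, attribute-based zero-shot classification. It is a toy illustration and not how GPT-4 or any production diagnostic model works internally. The attribute names, the condition vectors, and the `zero_shot_classify` helper are all hypothetical, and the numbers are invented for illustration rather than drawn from clinical data. The sketch assumes a model trained on common conditions can already score a new case on shared attributes; the rare condition is then matched purely from its written description, with no training examples of its own.

```python
import math

# Hypothetical attribute order. All values below are illustrative, not clinical data.
ATTRIBUTES = ["sun_sensitivity", "freckling", "scaling", "itching"]

# Each condition is described as a vector over the shared attributes.
# The rare condition has a description but no training examples.
CLASS_DESCRIPTIONS = {
    "eczema": [0.1, 0.0, 0.6, 0.9],
    "psoriasis": [0.1, 0.0, 0.9, 0.5],
    "xeroderma_pigmentosum": [1.0, 0.9, 0.3, 0.1],
}


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def zero_shot_classify(case_attributes, descriptions=CLASS_DESCRIPTIONS):
    """Return the condition whose description best matches the case.

    `case_attributes` stands in for the output of a model trained only on
    common conditions: it scores the shared attributes, never the rare label.
    """
    return max(descriptions, key=lambda name: cosine(case_attributes, descriptions[name]))


# A new case with strong sun sensitivity and freckling.
print(zero_shot_classify([0.9, 0.8, 0.2, 0.2]))
```

The point of the design is that nothing in the code is specific to the rare condition: adding another unseen condition only requires adding its description, not retraining.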

Acknowledging that ever-increasing model size and multi-modality will only accelerate the growth of emergent behaviors, we should refrain from over-indexing on what jobs AI will do, as AI will re-write the job descriptions altogether.

Instead, piecing together these behaviors as modular units of computation, we can produce entirely novel paradigms of user interface and human-computer interaction. We should build for the future in this way, without regard for the way humans and machines divide tasks today.

I’m reminded of the Steve Jobs quote:

“It’s really hard to design products by focus groups. A lot of times, people don’t know what they want until you show it to them.”

Taking stock of what’s possible today with GPT-4, here are 25 Emergent Behaviors of Large-Scale Models:

Redaction: Obscuring sensitive data from documents to maintain confidentiality.

Structuring: Organizing data into a specific format for better accessibility and analysis.

Abstraction: Extracting essential information from large data sets for key insights.

Generation: Creating new data or content, often for educational or training purposes.

Translation: Converting information into different languages or formats for wider accessibility.

Summarization: Condensing detailed information into brief, comprehensive overviews.

Imputation: Filling in missing values in data to complete datasets.

Reasoning: Drawing logical conclusions from available information to make decisions.

Interpolation: Estimating unknown data points within a series based on known values.

Prediction: Using data to forecast future events or trends.

Explanation: Providing reasons or justifications for decisions or outcomes.

Adaptation: Altering responses to suit changing conditions or data.

Personalization: Tailoring content or services to individual specifications or needs.

Intuition: Using insight to reach conclusions beyond the apparent data.

Analogizing: Comparing and relating similar concepts from different datasets or domains.

Synthesis: Combining varied pieces of data to create a unified understanding.

Contextual Understanding: Comprehending the broader implications of information within its background.

Sentiment Analysis: Evaluating the emotional tone of text to glean insights.

Disambiguation: Clarifying ambiguities in language or data for precise interpretation.

Commonsense Reasoning: Applying general knowledge and logic to form practical conclusions.

Categorization: Identifying and classifying complex patterns, transforming raw data into categorized, actionable insights.

Optimization: Tuning systems for multiple objectives simultaneously, in ways humans might not envision.

Hypothesis Generation: Generating hypotheses by correlating disparate data points.

Anomaly Detection: Detecting anomalies that deviate from known patterns.

Dialogue Systems: Engaging in nuanced conversations that preserve context, remember past interactions, and anticipate needs.

Emergent behaviors reflect a paradigm shift in the role of AI, from a tool that simply automates tasks to one that re-draws the boundaries of interaction and re-conceptualizes our understanding of work, innovation, and creativity. It's no longer about teaching AI to do what humans already do or what they can’t do; it's about re-authoring task construction in an AI-first world through problem-focused solutioning.

Embracing emergent behaviors and pointing them at previously intractable problems will allow us to move beyond the faster horse.

Thanks for reading,

Morgan

P.S. There probably exist many emergent behaviors I’ve failed to list because I or others have yet to discover them. Please share them as they become known!

Huge thanks to my pals Daniel Breyer, Michelle Wang, and Nikhil Krishnan for providing feedback on drafts of this piece.